Starting Pixel Chat - 4th March to 17th March 2024

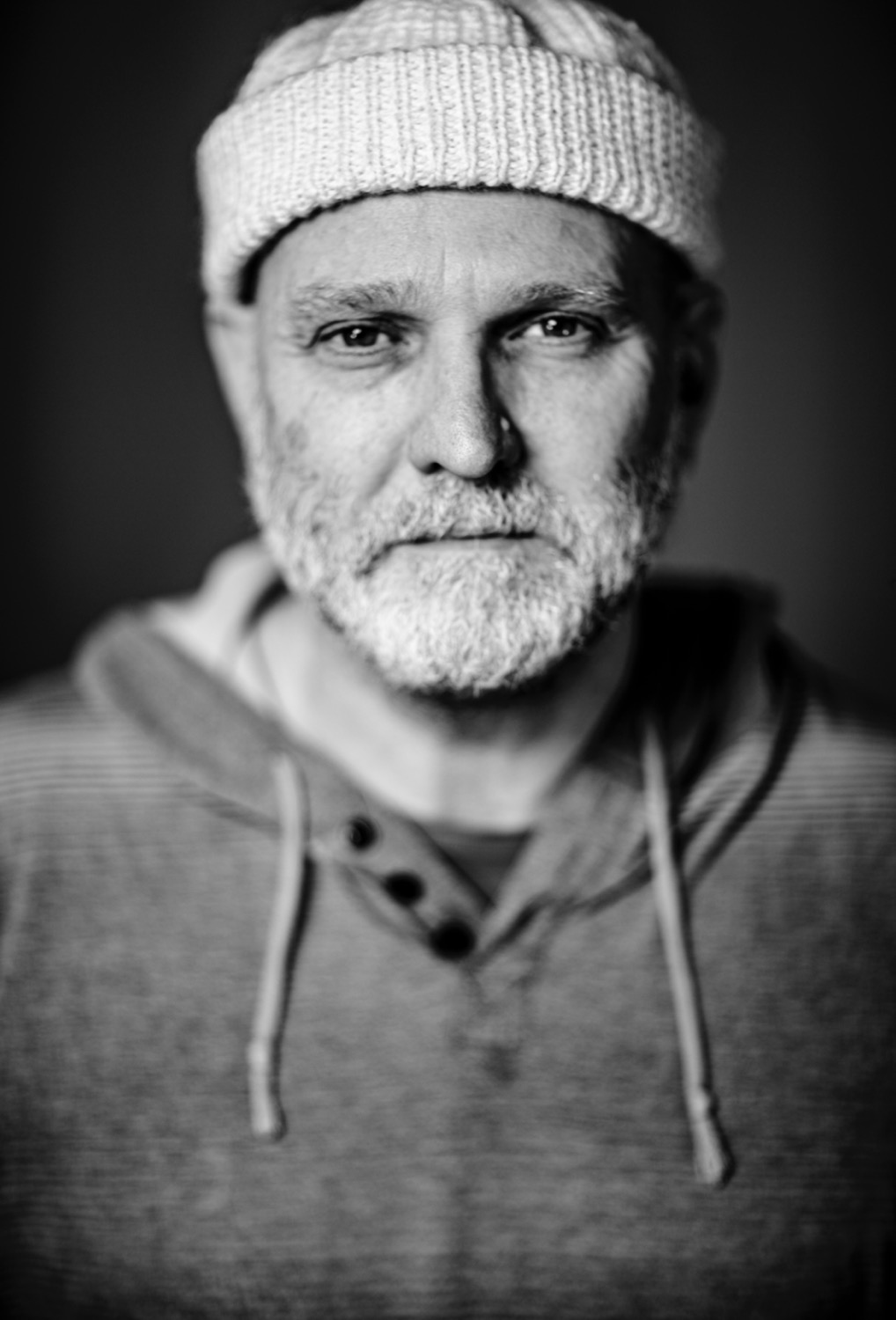

- Rob Chandler

- Mar 17, 2024

Updated: Mar 24, 2024

This summary was created using a ChatGPT "Chat Summariser". Expect the unexpected: there may be inaccuracies, but the grammar will be good.

Executive Summary

This conversation explores how to improve virtual production, especially in forest and outdoor nature settings. Participants share technical insights, experiences, and recommendations covering software, hardware optimisation, and best practices. Key areas of discussion include:

Performance Optimisation: Strategies include building only what is essential, carefully managing lighting and asset detail, using Unreal Engine features such as Nanite for high-detail geometry, and disabling certain processes at a distance to improve efficiency.

Software and Hardware Insights: Practical advice on Unreal Engine settings, on hosting Perforce across various platforms (with Digital Ocean recommended for its simplicity and cost-effectiveness), and on using wireless genlock and timecode solutions for camera synchronisation.

Storage and Rendering: Discussions cover managing draw distances and lighting to improve rendering performance, and using NAS storage with RAID configurations for reliable media handling. Participants also recommend specific Unreal Engine versions (5.3.1 or 5.3.2) depending on production needs.

Virtual Production Techniques and Technologies: The conversation covers many facets of virtual production, including resources on a flexible module and Unreal Engine's nDisplay quick launch tool, motion capture integration, Sequencer glitches, and lighting challenges in virtual environments. Particular attention goes to managing dynamic range when shooting with LED volumes and to using blue/green screens alongside LED setups for complex lighting scenarios.

3D Reconstruction and LiDAR Scanning: Discussion focuses on Gaussian Splatting (GaSp) and Neural Radiance Fields (NeRF) for virtual production, with participants sharing tools, workflows, and best practices for capturing and reconstructing 3D models. Topics include professional LiDAR scanning, comparing the clarity of Polycam's automatic mode with Luma, and bringing high-fidelity scans into Unreal Engine.

AI and Motion Capture in Filmmaking: The role of AI in filmmaking sparks debate, particularly its impact on creative integrity and on jobs within the industry. Participants also look at new motion capture technologies, such as Mo-Sys's CapturyVP, and at AI's potential to streamline production workflows.

Community Collaboration and Learning: The dialogue includes announcements of workshops, events, and projects aimed at fostering collaboration and knowledge sharing within the virtual production community. Topics range from sustainability in production to integrating VR, AI, and digital humans into creative workflows.

Animation and Digital Humans: Conversations cover procedural animation, removing unnecessary keyframes in Unreal Engine, and the move towards 4D technology for reproducing digital humans. Community members share resources and tips on animation techniques and help each other with software-specific problems.

Overall, these discussions combine technical and creative insight and show a virtual production community committed to advancing the field through technology, collaboration, and continuous learning.

Main Chat

The conversation centres on optimising forest or outdoor nature environments for virtual production, with participants offering technical tips and discussing their experiences with relevant software and hardware. Key points include:

Building only what is necessary and being mindful of lighting and asset detail to maintain performance.

Advice on using specific Unreal Engine features and settings for efficiency, such as disabling certain processes at a distance and using Nanite for high-detail geometry.

Discussion on managing draw distances and lighting to improve rendering performance.

Technical considerations for using Perforce on different hosting platforms, with a recommendation for Digital Ocean for ease of setup and cost-effectiveness.

Various tips on managing and optimizing virtual production environments, including the use of wireless genlock and timecode solutions for camera synchronisation.

Exchanges about which Unreal Engine version suits specific use cases, with recommendations for 5.3.1 or 5.3.2 depending on needs.

A broader discussion on storage solutions for media, with recommendations for NAS storage using RAID configurations and mentions of specific brands and models for high-performance requirements.

Links to resources about a flexible module and Unreal Engine's nDisplay quick launch tool.

Discussions about attending GDC (Game Developers Conference) and GTC (GPU Technology Conference).

Queries about operating Vive systems wirelessly and dealing with sequencer glitches in Unreal Engine, including a specific mention of a "DWM Sync Event error" in Stage Manager.

Suggestions for handling harsh sunlight in virtual scenes using sky lights, directional lights, and materials to achieve the desired look.

A mention of Unreal Engine updating its pricing and support options, including the cost of Epic Direct Support for companies purchasing more than ten Unreal Subscription seats.

Exploration of using VR and motion capture equipment with Unreal Engine, and considerations for color grading on set when shooting with LED volumes.

Discussion about potential Unreal Engine and AI integration, with criticism of claiming authorship of AI output without crediting the original creators' work.

Suggestions for managing dynamic range when shooting with LED volumes, including technical considerations for improving LED wall performance and maintaining consistent exposure.

A brief discussion about using blue or green screens in combination with LED volumes for more complex lighting setups, and the value of having a colorist on set during virtual production shoots.

Various technical and creative suggestions for improving virtual production workflows, including proposals to test new ideas for extending dynamic range and making LED wall backgrounds more realistic.

Overall, the conversation spans technical discussions about optimizing virtual production workflows, dealing with specific Unreal Engine issues, exploring new technologies for motion capture and virtual reality integration, and addressing the challenges of shooting with LED volumes.
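The storage discussion recommends NAS storage with RAID configurations, but the summary does not record which RAID levels or drive sizes were suggested. As a rough planning aid only, here is a minimal Python sketch of usable capacity for common RAID levels, assuming identical drives and ignoring filesystem overhead, hot spares, and vendor-specific layouts. The function name and the 8 x 16 TB example are hypothetical, not taken from the chat.

```python
# Minimum drive counts for each supported RAID level (standard definitions).
MIN_DRIVES = {"0": 1, "1": 2, "5": 3, "6": 4, "10": 4}


def raid_usable_tb(level: str, drives: int, drive_tb: float) -> float:
    """Approximate usable capacity (TB) of an array of identical drives.

    Hypothetical planning helper: ignores filesystem overhead and hot spares.
    """
    if level not in MIN_DRIVES:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < MIN_DRIVES[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DRIVES[level]} drives")
    if level == "0":
        return drives * drive_tb        # striping only, no redundancy
    if level == "1":
        return drive_tb                 # every drive mirrors the same data
    if level == "5":
        return (drives - 1) * drive_tb  # one drive's worth of parity
    if level == "6":
        return (drives - 2) * drive_tb  # two drives' worth of parity
    if drives % 2:
        raise ValueError("RAID 10 needs an even number of drives")
    return drives * drive_tb / 2        # RAID 10: striped mirrors, half of raw


if __name__ == "__main__":
    # Hypothetical example: an 8-bay NAS filled with 16 TB drives.
    for lvl in ("0", "5", "6", "10"):
        print(f"RAID {lvl}: {raid_usable_tb(lvl, 8, 16):.0f} TB usable")
```

RAID 5 and RAID 6 give up one or two drives' worth of capacity to survive one or two drive failures respectively, while RAID 10 gives up half the raw capacity in exchange for mirrored redundancy.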

Location Capture

The discussion focuses on 3D reconstruction, LiDAR scanning, and the use of technologies such as Gaussian Splatting (GaSp) and Neural Radiance Fields (NeRF) for virtual production and other applications. Key points include:

Introduction of PostShot, a tool for creating radiance-field scans, shown being used for headshots and location scans.

Comparisons of the clarity of GaSps produced by Polycam's automatic mode and by Luma, and participants' preferences between them.

Recommendations and guidelines for capturing images for 3D reconstruction, with links to user manuals and advice from companies like Volinga.

Technical discussions on limitations and best practices for using web services and desktop versions for frame processing and hyperparameter adjustments in 3D model creation.

Interest in professional LiDAR scanning devices for research and development projects, with suggestions for device rentals and companies offering measured survey services.

Explorations into the potential of integrating high-fidelity scans into Unreal Engine for virtual production, with examples and offers to share relevant work.

Announcements and discussions on new partnerships and launches related to capturing and processing techniques like NeRF, 3DGS, and 4DGS.

Recommendations for channels and individuals to follow for the latest updates on GaSp, NeRF, and related technologies.

Highlights of recent advancements and applications in machine learning models for processing 4D data, including motion capture, texture, and lighting.

Overall, the conversation touches on the exploration, application, and development of advanced 3D capture and reconstruction technologies, with a focus on improving workflows for virtual production and beyond.

Show and tell

The discussion encompasses a range of topics related to virtual production (VP), motion capture, AI in filmmaking, and technological advancements in capturing and rendering environments and actions:

LTX Studio's ability to generate entire productions from a single prompt, sparking debate about the implications of filmmaking dominated by AI and committees.

Concerns and discussions about the balance between AI's role in filmmaking and maintaining the integrity of creative processes.

Introduction of, and interest in, Mo-Sys's CapturyVP motion capture technology.

Various links to videos and articles showcasing demos and projects in virtual production and AI.

Discussions about the pros and cons of AI in creative industries, focusing on how it might replace traditional jobs but also create new opportunities.

The potential of AI to automate workflows and its impact on different aspects of production, including design, editing, and commercials.

The importance of training and adapting to new technologies while ensuring people are not left behind.

Specific tools and rigs used in virtual production, such as camera tracking systems and motion control rigs for shaking cars.

Requests for advice on leasing agreements for VP stage hires and on which clauses matter most in such agreements.

A mention of new tools for converting 2D Photoshop images into 3D models, with skepticism about their effectiveness.

Overall, the conversation reflects a blend of excitement and concern over the rapidly evolving role of AI and technology in creative processes, with a focus on how these tools can enhance filmmaking while also challenging traditional roles and workflows.

Events

The discussion revolves around various events, workshops, and projects related to virtual production (VP), with a focus on sharing knowledge, experiences, and opportunities for collaboration within the community. Key points include:

Rob Chandler announces a soft launch of 'Open Lens Sessions', in which crew members help test registration and the flow of the show, ahead of a hard launch with a participation fee.

Members express interest in attending and observing these sessions, highlighting the demand for such hands-on experiences.

A call is made for case studies for the MP&T Show in London, and several members express interest in contributing real-life shoot experiences that are not under NDA.

The community discusses the impact of AI on content creation and job roles, debating the balance between using AI as a tool and preserving human creativity and decision-making.

Members share offers of collaboration and invitations to events such as SXSW in Austin, the Media Production and Technology Show, and a virtual production seminar in New York.

Members express concern at the news that Anna Valley has entered administration, and discuss color calibration and gamut in VP projects.

A workshop is announced for writers interested in incorporating VP, VR, AI, and digital humans into their work, reflecting the interdisciplinary nature of modern content creation.

A conference titled "VIRTUAL PRODUCTION: how to reduce your budget & sustainability impact" is announced at the SERIES MANIA Forum in Lille, focusing on combining sustainability with cost efficiency in VP.

Overall, the conversation reflects the growing interest and evolving landscape of virtual production, highlighting opportunities for learning, collaboration, and adaptation to new technologies within the film and media production industry.

Human Digital

The conversation covers animation, including procedural animation, digital humans, and keyframe optimisation in Unreal Engine. Key points include:

A video linked by Rob Chandler discussing technology that interprets human speech.

A LinkedIn post shared by Michael Davey about an event related to gaming.

An individual experimenting with procedural animation to recreate a scene from "Train to Busan" using Unreal Engine.

A discussion on reproducing digital humans as 3D Gaussian splats (3DGS), with a move towards 4D technology.

Mention of using Atoms Crowd for crowd simulation.

A question about automating the removal of unnecessary keyframes between animation sequences to streamline the animation process.

Suggestions include using curve editor filters in Unreal, Maya, and MotionBuilder to simplify animation curves, and in particular the "Simplify" filter in the Curve Editor of Unreal Engine's Sequencer, which cleans up animation sequences without manual effort.

A YouTube link shared by Jake Fenton without explicit context, possibly related to one of the topics above.

The conversation shows the community's collaborative effort to share knowledge and solutions regarding animation techniques, software features, and procedural animation challenges.
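The keyframe question above asks how to remove unnecessary keys automatically. The chat does not describe how Unreal's "Simplify" filter works internally, so the following is only a minimal Python sketch of the general idea, not Unreal's algorithm. It assumes linearly interpolated keys given as (time, value) pairs sorted by time (real Sequencer curves often use cubic tangents), and a hypothetical `tolerance` in the curve's value units. It uses a Ramer-Douglas-Peucker style split: a key is kept only if the straight line between the surrounding kept keys misses it by more than the tolerance.

```python
from typing import List, Tuple

Key = Tuple[float, float]  # (time, value); hypothetical representation


def _lerp(a: Key, b: Key, t: float) -> float:
    """Linearly interpolate the value between keys a and b at time t."""
    (t0, v0), (t1, v1) = a, b
    if t1 == t0:
        return v0
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def simplify_keys(keys: List[Key], tolerance: float) -> List[Key]:
    """Drop keys that linear interpolation between kept keys reproduces
    within `tolerance` (Ramer-Douglas-Peucker with vertical error)."""
    if len(keys) <= 2:
        return list(keys)
    keep = [False] * len(keys)
    keep[0] = keep[-1] = True  # always keep the first and last keys
    stack = [(0, len(keys) - 1)]
    while stack:
        start, end = stack.pop()
        # Find the interior key that deviates most from the start-end line.
        worst_index, worst_error = -1, 0.0
        for i in range(start + 1, end):
            error = abs(keys[i][1] - _lerp(keys[start], keys[end], keys[i][0]))
            if error > worst_error:
                worst_index, worst_error = i, error
        # If it deviates too much, keep it and check both halves separately.
        if worst_error > tolerance:
            keep[worst_index] = True
            stack.append((start, worst_index))
            stack.append((worst_index, end))
    return [k for k, kept in zip(keys, keep) if kept]


if __name__ == "__main__":
    # A linear ramp followed by a hold: only the corner key is needed.
    curve = [(0, 0), (1, 1), (2, 2), (3, 3), (4, 3), (5, 3)]
    print(simplify_keys(curve, 0.01))  # [(0, 0), (3, 3), (5, 3)]
```

Raising the tolerance removes more keys at the cost of accuracy, which mirrors the trade-off when choosing a tolerance in any curve-simplification filter.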
