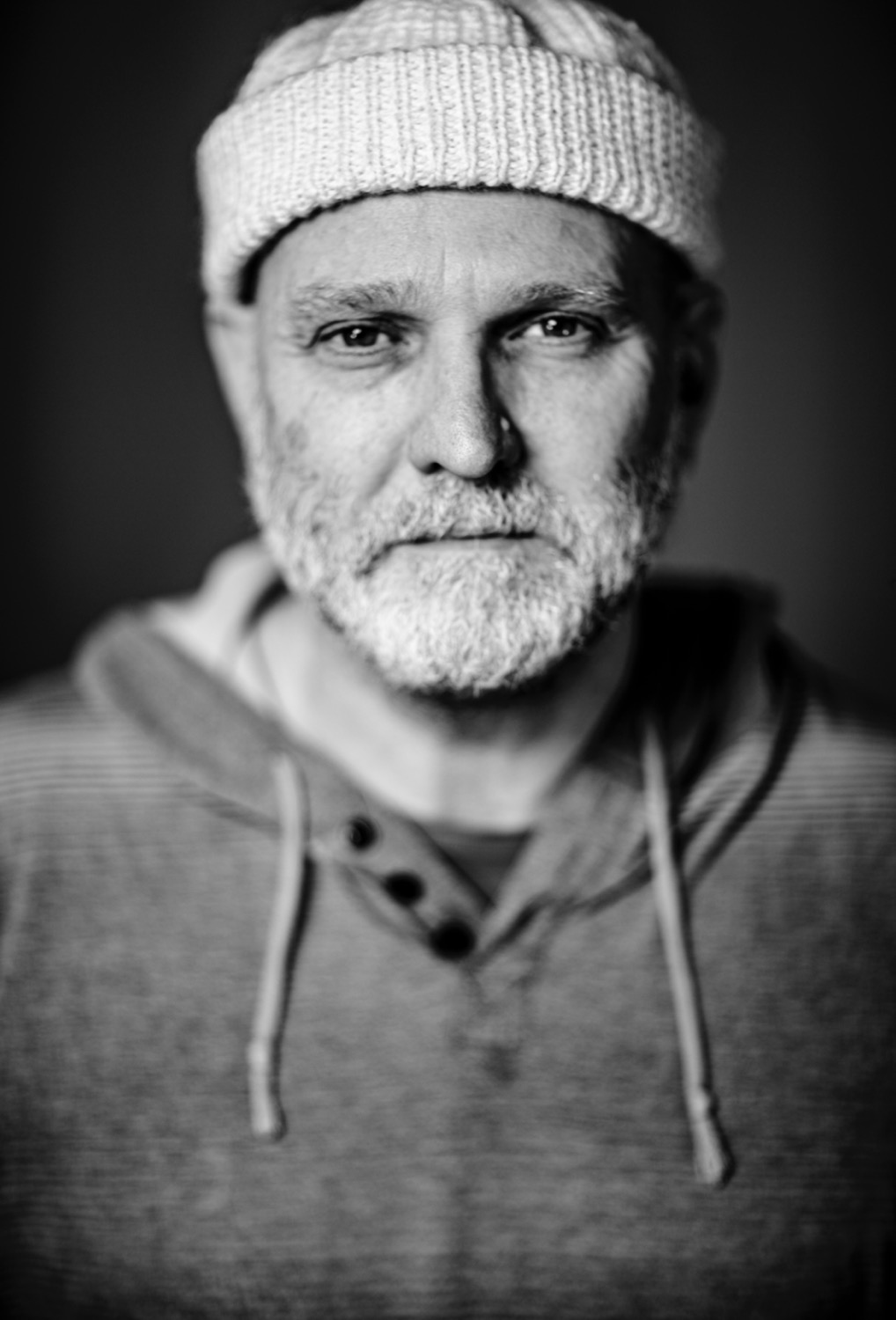

Starting Pixel Live 24: Dan Chapman on the Convergence of Games, Films, and Live Events

- Rob Chandler

- Nov 29, 2024

At Starting Pixel Live 24, Dan Chapman, Framestore’s product manager for real-time and virtual production, shared insights into the technological convergence of games, film, and live events that is redefining how we create and consume media. In an expansive talk, Chapman detailed how advances in game engine technology, VR, AR, and machine learning are transforming storytelling and bridging the gaps between these formats. This convergence, he explained, signals a shift toward more immersive and interactive entertainment experiences that reshape audience engagement.

From Virtual Production to Real-Time Filmmaking

Chapman began with a look at virtual production’s evolution, tracing it from its early use in films like Gravity to today’s cutting-edge game-engine-driven workflows. At Framestore, in-house tools like “Farsight” and “Fuse” streamline VP for large-scale productions, allowing for seamless integration of real-time technology across diverse devices. Framestore’s recent project, Flite, exemplifies this new approach. Directed by Tim Webber, the 15-minute hybrid film was a test case for using Unreal Engine as a primary rendering tool, merging traditional VFX with real-time flexibility. This blend of CG and live-action assets showcased the potential of real-time production to revolutionize film workflows, a development Chapman described as “essential for today’s speed and creativity demands.”

Platforms of the Future: A Unified Media Landscape

Chapman moved from production tech to broader industry shifts, particularly the convergence of video games, social media, and live events. Popular gaming platforms like Fortnite and Roblox are evolving into social hubs where players not only engage in gameplay but also take part in virtual concerts, fashion shows, and even educational events. This evolution, he noted, signals a move towards “the 3D internet,” where users interact in persistent, immersive environments that blend user-generated and professional content.

Games have shifted from static products to live services, illustrated by Grand Theft Auto’s 12-year run, sustained by continuous updates. Chapman explained that this model is economically viable because it encourages player engagement and in-game spending over extended periods. Furthermore, user-generated content drives engagement and content diversity, reducing production costs while expanding creative possibilities.

The Role of Major IP Holders and User-Created Content

As interactive platforms evolve, intellectual property holders are keen to capitalize. Disney’s recent investment in Epic Games and the establishment of creator platforms within Fortnite are examples of how brands are leveraging virtual worlds for new levels of audience interaction. In these spaces, content creation tools allow users to build experiences with branded assets, fostering creativity while maintaining brand control. Chapman envisions these tools as gateways to broader user engagement, where players become creators, continuously refreshing content within branded ecosystems.

From Passive Consumption to Active Participation

Chapman stressed that the convergence is not just about technology but also about cultural and economic shifts. The emergence of platforms that encourage active participation reflects a new content economy, where engagement, community, and revenue-sharing models are crucial. He likened this evolution to previous shifts brought on by the internet, which changed audience expectations from passive viewership to active participation. Today, platforms like Twitch and YouTube bring games into the mainstream, and live events like Fortnite’s The Big Bang concert draw millions of viewers.

Chapman emphasized that the same forces are driving traditional entertainment platforms like Netflix to experiment with gaming and interactive content. In this context, he suggested, success will come to platforms that best blend user experience, social connection, and immersive engagement, shaping a unified ecosystem where audiences and creators are in constant interaction.

AI and Machine Learning: The Future of Content Creation?

AI and machine learning, Chapman hinted, could add a new layer to this ecosystem. Although tools such as MetaHuman Animator and those from OpenAI are advancing creative workflows in virtual production, he remains cautious about AI's ability to fully replicate human-driven content creation. While AI might accelerate the creation of user-generated assets, Chapman sees real-time engines and interactive platforms as the primary drivers of this convergence in the near future. “For now,” he remarked, “humans still want to connect with other humans through shared passions,” signaling a demand for content that feels personal, authentic, and connected to real communities.

The Road Ahead: A Collaborative and Immersive Media Ecosystem

Chapman’s vision for the future of entertainment is one where the lines between games, films, and live events are increasingly blurred. He believes this convergence, fuelled by virtual production and interactive technology, is reshaping the media landscape into one vast, immersive ecosystem. As companies like Framestore develop open standards for 3D content and embrace transmedia pipelines, they’re positioning themselves for a future where audiences can engage with a story across platforms, from their favourite game to a virtual event to streaming media.

For creators, this means new opportunities to experiment with content that transcends traditional formats, driven by interactive, community-centric experiences. For audiences, the future promises a blend of entertainment, interactivity, and social engagement that offers endless ways to connect and engage with the stories they love. As Chapman put it, “Virtual production isn’t just a tool; it’s a transformative force bringing us closer to a unified media experience.”

Dan can be found here: www.linkedin.com/in/dan-chapman-td
