Starting Pixel - Weekly Summary 24th March 2024

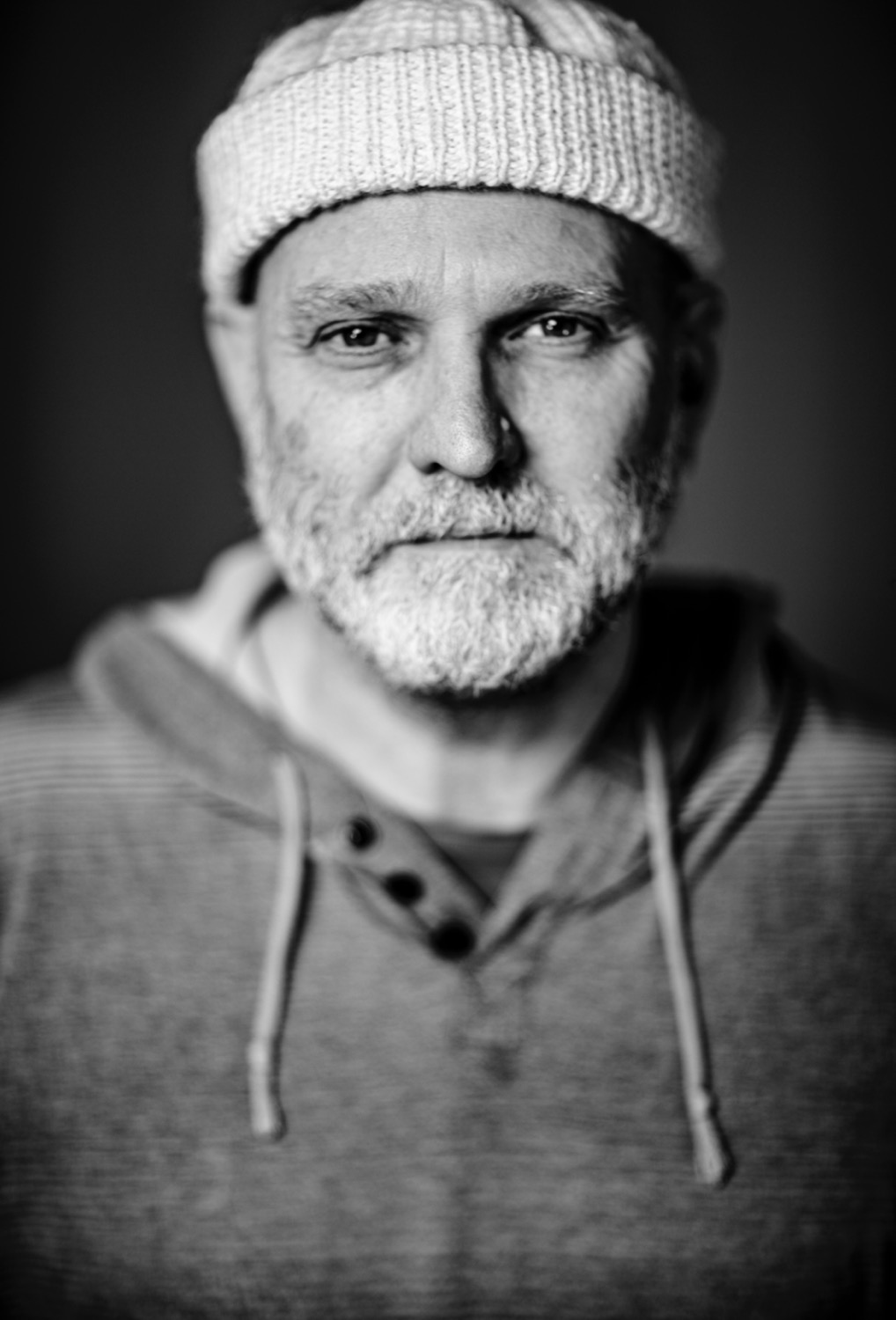

- Rob Chandler

- Mar 24, 2024

AI: Niagara, Octane, Digital Human

Executive Summary

This week's discussions covered technical topics, demonstrations, and environmental considerations, including advances in virtual production, rendering technologies, and the use of AI in character creation. Key points include:

Virtual Production (VP) Technologies: Conversations started with a demo seen at BSC, with particular interest in the Lightcraft Jetset app for basic VP use cases. Members speculated that a wriggling graphic had been created with Niagara and forced depth of field (DOF) effects. The app's potential for greenscreen VP was highlighted, and after some debate about live session support it was clarified that Jetset is intended for offline use.

Rendering Technologies and Environmental Considerations: Nvidia's advancements in power efficiency and inference capabilities were discussed, alongside Render/OTOY Octane, a distributed rendering solution. The group also compared the CO2 emissions of LED walls with those of traditional location shoots, pointing to the potential CO2 savings of virtual production.

Community Engagement and Technical Challenges: Requests for information on LED volumes in specific locations prompted members to share resources and studio links. Technical discussions included troubleshooting LED wall issues, with suggestions focusing on GPU temperatures and genlock. Queries about AI specialists and controlling AI advancement prompted discussions on the EU AI Act and technology's impact on industry practices.

Events and Hiring: Lux Machina and Sony Pictures posted openings for a technical specialist and an Unreal Engine Engineer, respectively. Announced events included a Virtual Production Artist Residency with the University of Galway and in-person events and webinars on AI tools from VES London. Upcoming sessions from Ubisoft and Stellar Creative Labs on OpenUSD in film, episodic, and game pipelines indicated a keen industry focus on integrating advanced technological workflows.

Digital Human and AI Characters: NVIDIA's announcement on digital human technologies to bring AI characters to life was shared, with Convai and Inworld mentioned as notable options for creating AI characters. A comparison of four 3D AI character engines on LinkedIn pointed to significant advancements in artificial intelligence and innovation in character creation.

Main Group

The conversation began with a link to a demo and questions about who had seen it at BSC. Members showed interest in the Lightcraft Jetset app for basic virtual production (VP) use cases, describing it as smart and stable and noting its potential for greenscreen VP. There was curiosity about how a particular wriggling graphic was created, with speculation that it used Niagara and forced depth of field (DOF) effects. Some debated whether Jetset supports live sessions; it was clarified that Jetset is for offline use and that a different piece of software, whose name was not recalled, handles live sessions.

Attention shifted to rendering technologies and the Nvidia GTC keynote, including Nvidia's advancements in power efficiency and inference capabilities, which were said to have cost billions to develop. The conversation also mentioned Render/OTOY Octane, a distributed rendering solution backed by significant figures in the industry.

Participants shared links to resources and tools for color management and virtual production efficiency, including a comprehensive life-cycle analysis comparing virtual and physical production methods. The conversation then compared the CO2 emissions of LED walls with those of traditional location shoots, highlighting the potential CO2 savings of virtual production.

Overall, the discussion blended technical curiosity about new virtual production and rendering technologies with concern for environmental sustainability in the film and television industry.

One participant mentioned using ChatGPT to calculate the CO2 emissions of an LED wall compared to driving, with the result suggesting that the LED wall might be more environmentally friendly. Another shared insights from a BAFTA event on sustainability, noting a lack of awareness among Generation Z. A link to a live event on YouTube was shared, prompting a discussion of whether to avoid flying for climate reasons and of the industry's broader environmental challenges.
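The LED-wall-versus-driving comparison comes down to simple arithmetic, sketched below in Python. This is only an illustration, not the participant's calculation: the function names are hypothetical, and every input value (wall power draw, shoot length, grid carbon intensity, distance, vehicle count, per-kilometre emissions) is a placeholder to be replaced with measured figures for a real production.

```python
def led_wall_co2_kg(power_kw: float, hours: float, grid_kg_per_kwh: float) -> float:
    """CO2 (kg) from powering an LED wall: energy used (kWh) x grid carbon intensity."""
    return power_kw * hours * grid_kg_per_kwh


def driving_co2_kg(distance_km: float, vehicles: int, kg_per_km: float) -> float:
    """CO2 (kg) from driving a convoy to a location: distance x vehicles x emissions per km."""
    return distance_km * vehicles * kg_per_km


if __name__ == "__main__":
    # PLACEHOLDER inputs, not measurements: swap in real figures before drawing conclusions.
    wall = led_wall_co2_kg(power_kw=50.0, hours=10.0, grid_kg_per_kwh=0.2)
    drive = driving_co2_kg(distance_km=200.0, vehicles=10, kg_per_km=0.15)
    lower = "LED wall" if wall < drive else "driving"
    print(f"LED wall: {wall:.1f} kg CO2, driving: {drive:.1f} kg CO2, lower: {lower}")
```

The result depends entirely on the inputs: a high-carbon grid or a short drive can reverse the outcome, which is why a full life-cycle analysis is more reliable than a single estimate.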

There was a request for information on LED volumes in specific locations, with various members providing links to studios in Hamburg, Berlin, and Prague. Some corrections and additional suggestions were made, including contact information for further inquiries. A reminder about group chat etiquette was issued, stressing the importance of personal privacy and appropriate channels for certain discussions.

Technical issues with an LED wall were discussed, with suggestions for troubleshooting potential causes such as GPU temperatures and genlock issues. Queries about AI specialists and policies for controlling AI advancement were raised, leading to discussions on the EU AI Act and the impact of technology on the environment and industry practices.

New members introduced themselves, sharing their backgrounds and interests in VP and environmental sustainability within the film and television industry. The community expressed appreciation for the supportive and informative nature of the group, with mentions of potential expansion and the creation of a Chicago chapter.

Location Capture

The conversation revolved around technical advancements and resources shared within the community. One participant shared a link to a video captured with a Humane AI wearable device, providing insight into its capabilities. Another shared a YouTube link explaining how Gaussian Splatting got its name.

One member announced the release of the Igelkott Native 16K360 test and training package, which includes over an hour of driving plates designed to test hardware and workflows. Users may modify the plates in framerate and format. A YouTube playlist accompanies the package.

Members asked about the capture rig, including camera and lens specs, though the individual could only publicly answer questions related to the files and point of view (POV). The footage was shot in X-OCN XT at 25fps with a camera height of 140cm, and exported in S-Log3; on inquiry, it was clarified that the export is not S-Gamut3.Cine.

There was also a note that the plates had lost a significant amount of data during processing, with plans to release new files to rectify this. Lastly, a link was shared to MVSplat, a tool for efficient 3D Gaussian Splatting from sparse multi-view images.

Back Chat

A series of discussions and shared resources unfold among professionals in a technology-focused group, covering various topics from artificial intelligence (AI) to virtual production (VP) and industry trends. Highlights include:

A link to a Business Insider article about an AI video booed at SXSW.

A member visiting LA seeks to meet with fellow group members.

Discussion about NVIDIA's keynote, including expectations on new Studio laptops, ventures into VP, and cloud announcements.

Links to resources and videos regarding AI developments, including GPT-2 implemented in an Excel sheet and tests on RED V-RAPTOR X cameras.

OpenAI’s CTO was grilled about the AI tool Sora.

Unreal Engine 5.4 release announcement and discussions about its implications for film production and VP.

Concerns and observations about the lack of mention of VP in recent announcements and the potential future of VP without explicit marketing support from companies like Epic.

New members introduce themselves, sharing their backgrounds and excitement about joining the group.

A discussion about the state and future of UE in VP, with optimism about the technology’s impact despite shifts in company focus.

Debate over the use of AI in content creation, particularly concerning ethics, legality, and impact on traditional roles.

An extensive list of resources and frameworks related to AI regulation and policy, both in the EU and globally.

Personal testimonials and expressions of value for the group, highlighting opportunities for learning, networking, and professional growth within the virtual production and AI sectors.

Commentary on the dominance of traditional energy sources and a mention of upcoming AI tools for 3D world generation.

Show and Tell

A link to a YouTube video was shared without further details.

A project titled "Antroposen" by the band maNga was produced with Unreal Engine's virtual production tools, integrating real-time camera movements, sound, motion, and lighting.

Tim Kang's explanation of camera white point and LED wall white point calibration was highlighted as valuable.

The importance of adopting the "Complex Version" for white point calibration and the usefulness of specialized tools like OpenVPCal, now integrated into Assimilate, were discussed.

There was a call for better education and practices in color management to prevent bad experiences and technical misunderstandings in VP.

The lack of excitement around color pipeline topics and the need for robust color management was acknowledged.

Assimilate's automation of color calibration using an SDI (LogWG) feed was mentioned as a significant advancement.

Members discussed why color management is necessary for realistic, clean images in VP, and how proper calibration enhances realism.

Sony's solution for color calibration was mentioned, along with an interest in a method using HS scope for quick and easy calibration.

The importance of robust color management was emphasized for accurate color rendition and to minimize post-production adjustments.

A summary noted that the goal of robust color management is to ensure accurate color rendering in-camera and in UE scenes, closely matching pre-production visuals.

The importance of a color-calibrated pipeline, even beyond UE, was stressed as a way to ensure accurate color representation for VAD artists and plate playback.

Discussions on different profiles for direct view versus emissive lighting and workflows that factor in viewing angles within the frustum.

A behind-the-scenes (BTS) and interviews video from a series filmed in October/November was shared, with a note that the member who shared it speaks in the video from 3:40.

A link to an Instagram reel was shared, posing the question of whether the content was AI or CG, suggesting the quality was very good.

Events, Training and Job Opportunities

Lux Machina is hiring a System Technical Specialist based in LA.

Sony Pictures is looking for an Unreal Engine Engineer for tech development in Culver City.

A session on implementing Omniverse for cinematic content production is highlighted as crucial, explaining the use of lighting physics, cameras, and USD workflow for collaboration and asset creation.

The session requires free registration and is deemed highly relevant to GTC presentations.

A Virtual Production Artist Residency was announced with the University of Galway, offering a 3-4 week residency with a 12k budget for Virtual Production practitioners from outside of Ireland. It aims to facilitate expertise sharing, collaboration, and professional development. The application deadline is March 31, 2024.

VES London is organizing in-person events and webinars focused on various AI tools, accessible to VES members or those who know a member.

Stellar Creative Labs is hosting a free virtual event on the practical implementation of OpenUSD for feature and episodic productions, scheduled for Thursday, March 28th at 2pm PT.

Ubisoft is hosting a free virtual event on how OpenUSD supports the evolution of its film and game pipelines, scheduled for Tuesday, March 26th at 8am PT.

Digital Human

NVIDIA announces digital human technologies to bring AI characters to life.

Convai and Inworld are identified as two very good options for creating AI characters.

A LinkedIn post compares four 3D AI character engines, highlighting advancements in artificial intelligence and innovation in character creation.
