Weekly Summary 8th April 2024

- Rob Chandler

- Apr 7, 2024


Updated: Jun 2, 2024

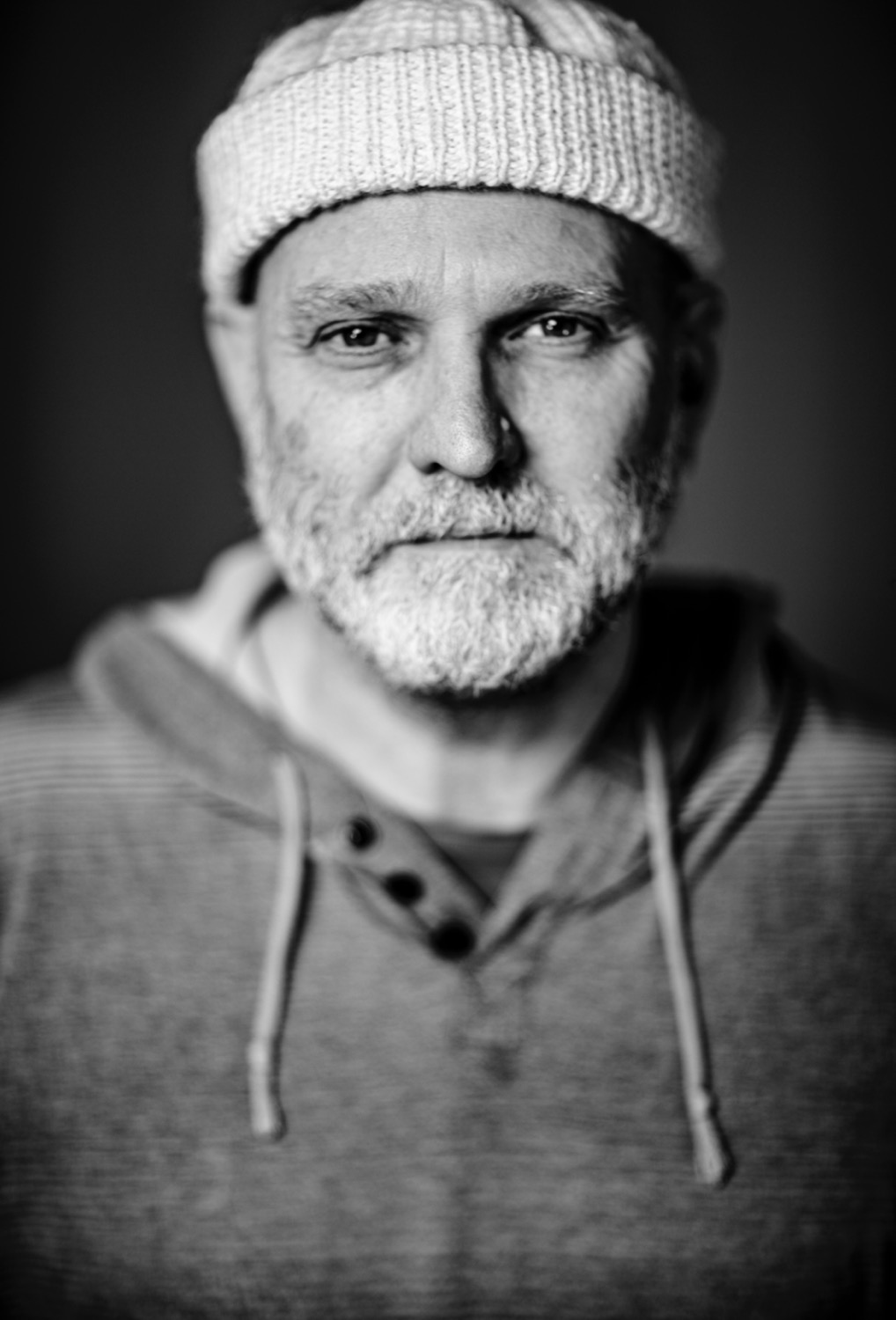

AI: realistic image of an AI robot taxman talking to a film director

This summary has been produced with ChatGPT; please assume there will be errors and omissions, as no one is perfect.

Executive Summary

UK Tax Incentives for Films: Detailed insights were shared on the new tax credits system in the UK, notably the 'indie tax credit' offering 40% tax relief for UK-qualifying films with budgets up to £15m. Additional incentives include a 5% increase in tax credit for visual effects and a significant relief on business rates for studio facilities, aiming to bolster the domestic production sector.

AI in Creative Industries: The discussion navigated the complexities of AI use, debating the ethical implications and the balance between innovation and potential issues such as equity, legality, and intellectual property. The conversation highlighted both the challenges and opportunities AI presents, with a call for a more structured dialogue on its role in creative industries.

Virtual Production Technologies: Participants exchanged information on various technical aspects of virtual production, including camera tracking apps, software compatibility with Unreal Engine, and rendering techniques. The discussions reflected a keen interest in exploring and integrating new technologies to enhance production quality and efficiency.

Networking and Job Seeking: Advice was shared on networking platforms and strategies for job-seeking within the industry, with recommendations for platforms like Talent Manager and caution advised against potential misuse of WhatsApp groups in light of investigations into cartel behavior in media.

Software and Hardware Discussions: The conversation also touched upon specific tools and platforms used in virtual production, discussing their capabilities, integration with existing workflows, and the impact of new entries like Chaos software aiming to compete with Unreal Engine.

Miscellaneous: Other topics discussed included the use of pseudonyms for professional purposes, ethical considerations in funding and support from organizations like the BFI, and practical challenges encountered in virtual production setups.

Main

An American living in London expresses affection for Chicago and Wrigley Field, mentioning a desire to revisit.

Another person introduces themselves as a film producer based in Germany, recently starting a new role, and expresses excitement for future exchanges.

A series of users join the group via invite links.

A discussion about a non-VP-related admin matter emerges, followed by a decision to take the conversation offline.

A request is made for high-quality car models for pre-viz, preferably free, though the requester is willing to pay. Suggestions include TurboSquid, CGTrader, Sketchfab, and GrabCAD.

A new group member introduces themselves as working on supporting the entertainment industry's evolution into immersive 3D experiences, and mentions a trial at SXSW.

A conversation about brainstorming 3D and infinity sets takes place, with plans to discuss further.

Information on color management and camera calibration for virtual production is exchanged, including the use of certain tools and platforms for ensuring color accuracy across the production process.

A color workflow video series and an interview with a director of photography on a specific production are also shared, continuing the exchange of resources on virtual production techniques.

A participant enthusiastically rates something as AAAAAA instead of AAA.

Someone seeks advice on using the Lightcraft Jetset app for camera tracking in Unreal Engine 5.3, mentioning past struggles with HTC Vive trackers, budget concerns, and specific hardware questions, including the use of an iPhone and integration with the Ninja V and Accsoon SeeMo.

Another mentions the behind-the-scenes (BTS) footage on Disney+ showcasing virtual sets built with the StageCraft engine as a source of high-quality production insights.

A conversation unfolds about using Chaos software for virtual production, discussing technical aspects like 3D rendering, camera tracking, and real-time compositing. There's interest in how these technologies integrate with LED walls and virtual production ecosystems.

The Netflix Validation Framework is introduced, aimed at identifying and addressing issues in virtual production workflows with Unreal Engine, with emphasis on its utility but also its limitations.

Further discussions delve into the technicalities of virtual production, including software capabilities, rendering techniques, material support, and the use of ray tracing.

Questions and insights about real-time rendering, scene modification, and the scalability of rendering processes, especially in relation to distributed and cloud rendering, highlight the evolving landscape of virtual production technologies.

Discussions revolve around the potential and challenges of real-time ray tracing in virtual production, with particular mention of Project Arena's capacity for removing or adding elements and its integration into existing virtual production (VP) hardware environments.

There's skepticism and curiosity about how new software, specifically referred to as Arena, compares to Unreal Engine in terms of latency, quality, and practical application, including for digital humans.

The conversation touches on the importance of DLSS 3.5 in enhancing speed and quality, offering a more direct route for content delivery to VP stages, potentially saving significant time and resources.

A participant shares a video link to elucidate ray tracing for those unfamiliar with the technology and praises the formation of the discussion group.

One contributor expresses excitement for the emergence of Chaos software as a potential competitor to Unreal Engine, noting its suitability for larger VFX houses and projects, while another seeks technical clarity on latency issues, scene operability, and integration capabilities of Arena software.

A discussion on the efficiencies of cloud rendering versus local rendering unfolds, highlighting the potential cost-effectiveness and performance benefits of cloud services for rendering tasks.

The conversation delves into the technicalities of network latency and cloud rendering capabilities, with contributors debating the practicality and financial sense of cloud rendering services for virtual production and other intensive rendering workflows.
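One way to make the latency side of this debate concrete is to express a network round trip as a share of the frame budget. The sketch below is illustrative only: the function names and the sample 30 ms round-trip figure are assumptions for the example, not numbers taken from the discussion.

```python
def frame_time_ms(fps: float) -> float:
    """Duration of a single frame in milliseconds at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def latency_in_frames(latency_ms: float, fps: float) -> float:
    """Express a latency (e.g. a network round trip) as a number of frames."""
    return latency_ms / frame_time_ms(fps)


if __name__ == "__main__":
    # Hypothetical 30 ms round trip to a remote render node (assumed value).
    for fps in (24, 48):
        frames = latency_in_frames(30.0, fps)
        print(f"{fps} fps: {frame_time_ms(fps):.2f} ms per frame, "
              f"30 ms latency = {frames:.2f} frames")
```

The same round trip costs twice as many frames at 48fps as at 24fps, which is one reason latency matters more as frame rates rise.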

Questions arise regarding the licensing and crediting requirements for using Unreal Engine in projects, with specific guidelines for crediting Epic Games and Unreal Engine highlighted.

Inquiries are made about the possibility of integrating new technologies into the disguise ecosystem, similar to how Unreal is currently utilized.

VP Show and Tell

A study was shared suggesting that human body data utilized by contemporary performance capture systems is flawed.

Various links to videos and professional content were posted, showcasing the technical aspects of virtual production and performance capture, including one that included the technical drawing for a cockpit stage used in a project.

Live tracking using Mo-Sys technology was discussed, alongside a shoot at specific stages, employing two cameras for a mixed virtual production.

The updates in Unreal Engine 5.4 were discussed, specifically the removal of ray-traced reflections and the entire ray-traced module, including GI and translucency, sparking debate on the implications for virtual production quality and workflow.

The conversation also covered the technicalities and preferences of color management in XR set extensions, with a focus on the process of matching camera images to digital outputs and the effectiveness of different LUT generation processes.

A breakdown of a project for Saudi Founding Day was shared, boasting five virtual locations shot in 12 hours, demonstrating the power of Unreal Engine.

The success of various virtual production shoots was celebrated, with participants applauding the color, lighting, and overall quality of the work presented.

The potential of Eyeline volumetric capture technology was discussed, with a specific mention of the use of previsualized scenes.

A discussion on frame rates used in virtual production, specifically the use of 48fps for certain effects, highlighted the technical considerations in achieving high-quality visual effects.

A studio shared their achievement of creating six different locations in one day using Unreal Engine 5.3, expressing satisfaction with the results.

The launch of a new platform, CityBLD, was announced, which generated interest, especially around indie pricing options and the availability of a plan for solos and small developers, illustrating the broader community's interest in accessible virtual production tools and resources.

Events, Training and Work Apps

An inquiry was made about the date of the Pipeline Conference, and it was mentioned that the organization is still seeking committee members.

A London-based immersive producer/director/artist expressed availability for freelance work in Virtual Production, seeking to gain more hands-on experience in various roles.

An announcement was made about a Virtual Production Artist Residency with the University of Galway offering a budget for practitioners from outside of Ireland to share expertise and encourage collaboration.

Information about the VFX Festival, including early bird discounts and venue details, was shared.

A TEDx event and several job postings related to virtual production were mentioned, including opportunities at On-Set Facilities (OSF) for crew members across various locations.

A VP Gathering in Breda, NL, was announced, and a link to a 3D model of a car on LinkedIn was shared for those interested in automotive rendering.

An event at NAB in Las Vegas focusing on virtual production was highlighted, along with a job opening for a Lead Software Engineer specializing in Python, 3D engines, and cloud computing.

Advertisements for graduate roles in AI/ML Engineering and Technical Support Engineering were shared.

Training opportunities in the UK were announced by Stype Academy and Stage Precision, covering core skills and Virtual Production sessions.

A Proof of Concept (POC) shoot at Morden Wolf, open for observation or hands-on participation, was announced.

Digital Human

There was enthusiasm shared for Ace's capabilities in performance capture, as demonstrated in a LinkedIn post.

A demo for testing Dollars Mocap was created, allowing users to experiment with mocap without needing to rig a character first.

Unity's shutdown of Ziva Dynamics was discussed, noting the potential impact on the Ziva team and the virtual production technology landscape. Ziva was compared favorably to MetaHuman in terms of technology and realism.

DNEG's acquisition of an exclusive license to Ziva Technologies was announced, sparking interest in how this might influence future virtual production and animation projects.

The capabilities and limitations of various motion capture and animation systems were discussed, including GaSp for accurate finger positioning and the capture of emotional facial expressions.

Queries about websites offering rigged packs of pedestrians with high levels of detail (LOD) were raised, with suggestions including ActorCore 3D and Reallusion's offerings.

The limitations of MetaHumans in terms of flexibility and the requirement for a solid internet connection were mentioned, alongside the potential of alternative solutions like Reblium.

A discussion about rendering challenges and the potential of Unreal Engine 5.3 for achieving high-quality final sequences, especially with path tracing, took place.

An offer of help from a member of the Reblium team was extended to those with questions about the platform.

The conversation also touched on the challenges of updating projects from Unreal Engine 5.1 to 5.3, with a focus on taking advantage of the updates in the newer engine version.

Location Capture

A link was shared about Lixel L2 LiDAR Gaussian splatting from Xgrids, highlighting advancements in volumetric data processing.

Improvements to image quality and the release of NotchLC versions of all plates were discussed after resolving an issue that made the image softer. A link to access these updated versions was provided.

The key benefits of Native 16K360 imaging were outlined: single point-of-view capture, a small setup with no need for stitching, and the ability to view plates directly from the camera in a VR headset. The images were revealed to have been captured at night in Stockholm, Sweden, on what was described as a "home stretch."

Interest in the location of the footage was expressed, acknowledging the appeal of the area and mentioning experiences in Sweden but not in the countryside.

An offer was made to use the footage for testing the splat process, indicating a willingness to share these resources with the community.

An AI renderer for Cinema4D that can transform wireframe/blocking to photo-real material in seconds was highlighted, pointing to the potential efficiencies AI can bring to the rendering process in animation and visual effects.

Back Chat

A discussion unfolded around the development of 3D world generation by Midjourney, with varying opinions on the roadmap and art direction capabilities. The ethical considerations of using such AI tools were debated, highlighting concerns about equity, legality, and intellectual property. The conversation touched on Adobe's ethical approach with Firefly contrasted with Midjourney's approach, and the need for thorough discussion and understanding of AI tools within the community.

A video was shared to provide insight into the issues surrounding AI tools, suggesting a need for careful consideration and dialogue. Separately, DNEG's acquisition of an exclusive license to Ziva Technologies sparked interest, particularly regarding how it might affect Unity's licensing terms, especially in relation to compatibility with Unreal Engine.

The conversation veered into the ethical implications of AI, with some participants expressing concern over the rapid adoption of AI without fully considering its impact. Others defended the democratization of creative tools provided by AI, highlighting its potential to make creativity accessible to those traditionally excluded from artistic and filmic expression due to various barriers.

The discussion concluded with a call for a more organized debate on the ethics of AI, recognizing the complexity of the topic and the need for a more structured discussion to explore the various viewpoints and concerns surrounding AI's role in creative industries.

Discussions on the forum highlighted various topics related to AI in creative industries. Concerns were raised about the focus on showcasing AI's capabilities without critically examining its implications, prompting suggestions for a separate channel to discuss such matters.

The ethical considerations surrounding AI use in creative processes were debated, with some participants emphasizing the importance of respect and comprehensive discussion.

Experiences of using pseudonyms in professional settings were shared, highlighting their practicality and advantages in certain industries.

Power requirements for virtual production and location shoots were discussed, providing insights into the rule of thumb for electrical needs based on wall size and level of production.

Developments in AR technology, specifically the Magic Leap 2 XR bundle, were discussed, with participants sharing their anticipation and skepticism about the technology's potential and affordability.

The Unreal Editor for Fortnite (UEFN) was mentioned as a learning opportunity, and a request for connections to Unreal developers was made, focusing on finding employment for student interns skilled in Unreal and Unity.

NVIDIA's blog on Edify 3D generative AI and custom fine-tuning was shared, alongside a LinkedIn post detailing the behind-the-scenes process of a Sora-generated balloon head video, noting the extensive post-production work involved.

A snippet from "The Rest is Entertainment" podcast discussed UK tax cuts for film and VFX, offering insights into the financial aspects of the creative industries in the UK.

A detailed overview of the UK's enhanced tax incentives for independent films was shared, including a 40% tax relief for films with budgets up to £15m, a 5% increase in tax credit for visual effects, and a 40% discount on business rates for studio facilities. These measures aim to support smaller productions and make film and high-end TV production more financially viable in the UK.
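As a rough illustration of the headline figure, the sketch below applies a flat 40% relief to a film's budget and rejects budgets above the £15m threshold. This is a simplification under stated assumptions: the actual scheme has its own definitions of qualifying expenditure and eligibility that this summary does not cover, and the function name and sample budget are hypothetical.

```python
INDIE_RELIEF_PERCENT = 40          # 40% relief, as reported in this summary
INDIE_BUDGET_CAP_GBP = 15_000_000  # budgets up to £15m, as reported in this summary


def estimated_indie_relief(budget_gbp: int) -> float:
    """Rough relief estimate in GBP.

    Assumption: the rate applies flat to the whole budget. Real-world
    qualifying-expenditure rules and any tapering are deliberately ignored.
    """
    if budget_gbp < 0:
        raise ValueError("budget must not be negative")
    if budget_gbp > INDIE_BUDGET_CAP_GBP:
        raise ValueError("budget exceeds the £15m threshold reported in the summary")
    return budget_gbp * INDIE_RELIEF_PERCENT / 100


if __name__ == "__main__":
    budget = 5_000_000  # hypothetical £5m production
    print(f"Estimated relief on £{budget:,}: £{estimated_indie_relief(budget):,.0f}")
```

Under these assumptions, a hypothetical £5m production would see an estimated relief of £2m. Anyone budgeting a real project should check the official guidance rather than rely on this arithmetic.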

Discussions about the ethical use of AI in creative processes highlighted concerns over the uncritical celebration of AI advancements without addressing potential problems. A separate channel for more focused discussion on AI ethics was suggested.

Questions were raised regarding the compatibility of Avid Maestro with the latest versions of Unreal Engine, highlighting the practical challenges of integrating new technologies into existing workflows.

The Magic Leap 2 and its potential for augmented reality applications sparked interest, with members sharing their experiences and opinions on the device compared to other options like the Meta Quest 3.

Networking and job-seeking advice was exchanged, with recommendations for platforms like Talent Manager, Film London, and Equal Access Network. Concerns were voiced about the effectiveness of other networks such as Mandy and the potential misuse of WhatsApp groups in light of ongoing investigations into cartel behavior in media.

The discussion concluded with a note on V-Ray's intention to compete with Unreal Engine, indicating growing competition in the virtual production software market.
